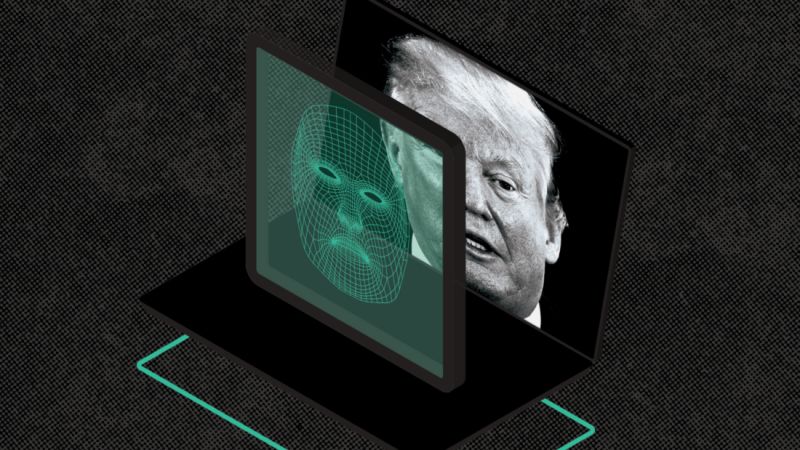

Tech companies have formed an accord aimed at limiting the impact of AI-generated deepfake content online. As artificial intelligence advances rapidly, concern is growing that it could disrupt democratic elections through the spread of misleading or manipulative content.

Representatives from major tech platforms, including Google, Meta, Microsoft, OpenAI, X and TikTok, agreed to implement reasonable precautions through a new pact called the "Tech Accord to Combat Deceptive Use of AI in 2024 Elections". The accord focuses on seven key elements, including monitoring for AI-generated deepfakes, developing technical detection methods, and responding to incidents in a timely manner.

The accord's focus on monitoring, detection, and timely response helps establish a framework for collaboration between tech companies. But proactively addressing how users perceive and judge online content may prove just as important as reactive measures.

Platforms' respective social media marketing (SMM) panels could help through initiatives such as media literacy programs that teach users how to evaluate online information. Fact-checking partnerships could also help debunk deepfakes before they spread widely.

Detection methods will also need continuous refinement as generative AI models advance. Techniques that analyze only individual words may fail against future deepfakes crafted to read as seamlessly conversational and contextually consistent. Holistic approaches that look for signals such as atypical sentence structures or logical inconsistencies may fare better at distinguishing human-written from AI-generated content.
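To make the idea of sentence-level signals concrete, here is a minimal Python sketch. It is an illustration only, not a method described in the accord or used by any platform: the function name `sentence_structure_features` and the choice of features are assumptions, and on their own these numbers cannot tell human writing from AI output. They simply show the kind of structural summary a more holistic detector might take as one input among many.

```python
import re
import statistics


def sentence_structure_features(text: str) -> dict:
    """Hypothetical helper: summarize the sentence-level structure of a text."""
    # Naive split on ., ! or ? followed by whitespace; good enough for a sketch.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    lengths = [len(s.split()) for s in sentences]
    words = re.findall(r"[a-z']+", text.lower())
    return {
        "sentence_count": len(sentences),
        "mean_sentence_length": statistics.mean(lengths) if lengths else 0.0,
        # Spread of sentence lengths, one rough proxy for varied structure.
        "sentence_length_stdev": statistics.pstdev(lengths) if lengths else 0.0,
        # Share of distinct words, a rough proxy for vocabulary variety.
        "type_token_ratio": len(set(words)) / len(words) if words else 0.0,
    }


if __name__ == "__main__":
    sample = "The cat sat. The cat sat on the mat today! Why?"
    print(sentence_structure_features(sample))
```

In practice, features like these would need to be combined with many other signals and validated against labeled data before informing any decision about a piece of content.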

There are also open questions around how to balance free expression with limiting harm. Overly broad content restrictions risk censoring legitimate political satire or parody. Meanwhile, a hands-off approach leaves the influence of deepfakes unchecked. As a middle ground, platforms might consider labeling potentially misleading deepfakes while still allowing them to circulate. This adds transparency without resorting to outright bans that could set concerning precedents.

Geopolitical factors further complicate global responses. Regulations in some nations may curb deepfake proliferation domestically, but displacement effects could shift problematic content to platforms that prioritize free speech. International cooperation will be needed to establish baseline standards while respecting diverse legal frameworks.

Developing technical solutions is also challenging given the "arms race" dynamics between generative AI and detection methods. Offensive tools will likely continue outpacing defensive counterparts for the foreseeable future. Comprehensive strategies combining technical, educational and policy-based approaches seem most viable for mitigating risks amid such rapid technological change.

Moving forward, sustained cooperation through initiatives like the new accord will remain crucial. Platforms' SMM panels provide an important venue for regularly evaluating detection capabilities, sharing the latest research, and discussing collaborative responses to emerging issues.

Civil society also has a role, through accountability mechanisms that assess how the accord is implemented and propose reforms to strengthen public protections. With open dialogue and a commitment to progress, the risks that deepfake AI poses to election integrity and democratic discourse can continue to be limited.

For businesses seeking to navigate these complex technological shifts, expert guidance is invaluable. Great SMM provides full-service SMM management to help companies leverage online channels responsibly and maximize their impact. Through strategic planning, creative content development, and analytics integration, we ensure marketing stays focused on your goals while respecting community standards. Contact us to discuss how our team can boost your brand in a compliant, considerate manner.