Social media algorithms have become a central part of our online lives, shaping what we see and who we connect with. But an important question comes up: do these systems unintentionally help create echo chambers and make people more divided?

Echo chambers are environments in which people mostly encounter information, ideas, and points of view that support what they already believe and like. Social media algorithms are built around user engagement and the advertising revenue that engagement generates.

These algorithms are designed to analyze user data and serve personalized content. Personalization can improve the user experience by surfacing material that is relevant to each person, but it can also have unintended effects.

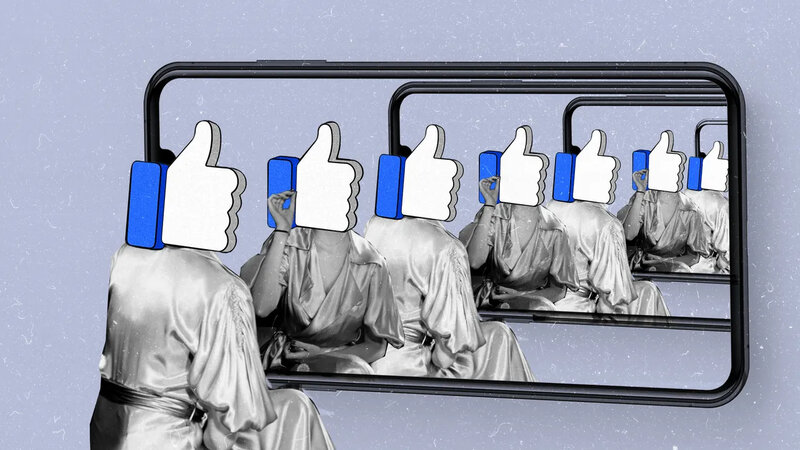

As social media algorithms learn from how people use them, they favor material similar to what people have already liked. This creates a "filter bubble" in which people see only a narrow range of points of view, which reinforces their existing views and ideas. In the end, people may find themselves in echo chambers, surrounded by others who think, value, and believe the same things they do.
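The feedback loop described above can be illustrated with a toy sketch. Everything here is an assumption made for illustration: the `recommend` function, the catalog, and the rule "rank by how often the user liked that topic" are hypothetical and far simpler than any real platform's system.

```python
from collections import Counter

def recommend(catalog, liked_topics, k=3):
    """Hypothetical rule: rank items by how often the user liked their topic."""
    counts = Counter(liked_topics)
    # sorted() is stable, so items with equal counts keep their catalog order.
    return sorted(catalog, key=lambda item: -counts[item["topic"]])[:k]

# Made-up catalog covering four topics.
catalog = [
    {"id": 1, "topic": "politics_a"},
    {"id": 2, "topic": "politics_b"},
    {"id": 3, "topic": "sports"},
    {"id": 4, "topic": "politics_a"},
    {"id": 5, "topic": "science"},
    {"id": 6, "topic": "politics_a"},
]

liked = ["politics_a"]  # the user starts with a single like
topics_seen = []
for _ in range(3):
    feed = recommend(catalog, liked)
    topics_seen.append(sorted({item["topic"] for item in feed}))
    # Assumption: the user likes everything shown, which feeds the next round.
    liked.extend(item["topic"] for item in feed)

print(topics_seen)
```

In this toy setup a single initial like is enough for every round of the feed to contain one topic only, even though the catalog holds four.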

Echo chambers cause serious problems. When people only hear one side of an issue, they may develop a skewed view of the bigger picture and fail to consider other points of view. This can make people even more entrenched in their own views and less willing to have constructive conversations or look for common ground.

Because social media feeds are driven by algorithms, divisive material also spreads more easily. Posts with a lot of drama or controversy tend to get more likes and comments, which leads algorithms to highlight and spread that kind of content. This can amplify people with extreme views and inflammatory language, which can make society even more divided and hostile.
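As a rough sketch of why controversial posts rise to the top, consider a ranking that scores posts purely by engagement. The `Post` fields, the weights, and the example numbers below are all invented for illustration; real platforms do not publish their scoring formulas in this form.

```python
from dataclasses import dataclass

@dataclass
class Post:
    title: str
    likes: int
    comments: int
    shares: int

def engagement_score(post, w_like=1.0, w_comment=2.0, w_share=3.0):
    """Hypothetical weighted sum; the weights are assumptions, not real values."""
    return w_like * post.likes + w_comment * post.comments + w_share * post.shares

def rank_by_engagement(posts):
    """Order posts from highest to lowest engagement score."""
    return sorted(posts, key=engagement_score, reverse=True)

# Made-up numbers: the heated post has fewer likes but far more comments and shares.
posts = [
    Post("Calm explainer", likes=120, comments=10, shares=5),
    Post("Heated argument", likes=90, comments=80, shares=40),
]

ranked = rank_by_engagement(posts)
print([p.title for p in ranked])
```

Under these assumed weights the heated post scores 370 against 155 for the explainer, so it is shown first despite having fewer likes.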

Social media algorithms can also inadvertently make confirmation bias worse. Confirmation bias is the tendency to seek out and interpret information in ways that back up what you already believe, while ignoring evidence that contradicts it.

By showing users material that matches their tastes, algorithms inadvertently strengthen confirmation bias. This keeps people from hearing different points of view and makes it harder for them to think critically.

Echo chambers and division on social media need to be addressed on more than one front. Platforms can do a lot to promote variety in content by using algorithms that give more weight to a wider range of points of view. Building in ways for people to see material outside their comfort zones can help break the filter bubble and lead to more nuanced conversations.
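One way to picture an algorithm that gives more weight to a wider range of viewpoints is a greedy re-ranking step that discounts viewpoints already present in the feed. This is a minimal sketch under stated assumptions: the `viewpoint` labels, the `relevance` scores, and the `diversity_weight` parameter are hypothetical, and labeling viewpoints reliably is itself a hard problem that this example ignores.

```python
def rerank_with_diversity(items, k, diversity_weight=0.5):
    """Greedily pick k items, penalizing viewpoints that are already in the feed."""
    remaining = list(items)
    feed = []
    shown = {}  # viewpoint -> number of times it already appears in the feed
    while remaining and len(feed) < k:
        best = max(
            remaining,
            key=lambda it: it["relevance"]
            - diversity_weight * shown.get(it["viewpoint"], 0),
        )
        feed.append(best)
        shown[best["viewpoint"]] = shown.get(best["viewpoint"], 0) + 1
        remaining.remove(best)
    return feed

# Made-up candidates: viewpoint "A" matches the user's history most closely.
candidates = [
    {"id": "a1", "viewpoint": "A", "relevance": 0.9},
    {"id": "a2", "viewpoint": "A", "relevance": 0.8},
    {"id": "a3", "viewpoint": "A", "relevance": 0.7},
    {"id": "b1", "viewpoint": "B", "relevance": 0.6},
    {"id": "c1", "viewpoint": "C", "relevance": 0.5},
]

relevance_only = [it["id"] for it in rerank_with_diversity(candidates, 3, 0.0)]
diversified = [it["id"] for it in rerank_with_diversity(candidates, 3, 0.5)]
print(relevance_only, diversified)
```

With the weight set to zero the feed contains only viewpoint A. With the assumed weight of 0.5 the top item is unchanged, but the second and third slots go to viewpoints B and C.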

Transparency is also important. Social media platforms should explain how their algorithms work and how they affect the way content is distributed. Users should be able to adjust their algorithmic experience by choosing to see a wider range of material. This would help them get out of echo chambers and engage with more ideas.